The AI Race

The race to AGI is ideological, and will drive us to the exact dangers it claims to avoid

The current situation of AI development is paradoxical:

Humanity is on a default path toward increasingly powerful AI that risks causing our extinction, which key actors acknowledge.

Those same major actors are racing towards this future with abandon; expert discourse is filled with pseudoscientific reassurances that discount the core issues; policy approaches fail to bind any of these actors to a safe future; and safety efforts assume that we will “muddle through” without putting in the necessary effort.

To make sense of this apparent contradiction, we must take a closer look at exactly how the AGI race is unfolding: Who are the participants? What are their motivations? What dynamics emerge from their beliefs and interactions? How does this translate into concrete actions, and how can we make sense of those actions? Only by doing this can we understand where the AGI race is headed.

In The AGI race is ideologically driven, we sort the key actors by their ideology, explaining that the history of the race to AGI is rooted in “singularitarian” beliefs about using superintelligence to control the future.

In These ideologies shape the playing field, we argue that the belief that whoever controls AGI controls the future leads to fear that the “wrong people” will end up building AGI first, leading to a race dominated by actors who are willing to neglect any risk or issue that might slow them down.

In The strategies being used to justify and perpetuate the race to AGI are not new, we argue that in order to reach their goal of building AGI first, the AGI companies and their allies are simply applying the usual industry playbook of Big Tobacco, Big Oil, and Big Tech: creating confusion and fear, which they then use to wrest control of the policy and scientific establishment. That way, nothing stands in their way as they race with abandon to AGI.

In How will this go?, we argue that the race, driven by ideologies that want to build AGI, will continue. There is no reason for any of the actors to change their tack, especially given their current success.

Studying the motivations of the participants clarifies their interactions and the dynamics of the race.

Utopists, who are the main drivers of the race, and want to build AGI in order to control the future and usher in the utopia they want.

Big Tech, who started by supporting the utopists and are now in the process of absorbing and consuming them to keep their technological monopolies.

Accelerationists, who want to accelerate and deregulate technological progress because they think it is an unmitigated good.

Zealots, who want to build AGI and superintelligence because they believe it’s the superior species that should control the future.

Opportunists, who just follow the hype without having any strong belief about it.

Utopists: Building AGI to usher in utopia

This group is the main driver of the AGI race, actively pushing for development in order to build its vision of utopia. It includes the AGI companies DeepMind, OpenAI, Anthropic, and xAI (and Meta, though we class them more as accelerationists below). A second, overlapping cluster of utopists supports the “entente strategy,” including influential members of the philanthropic foundation Open Philanthropy and the think tank RAND, as well as leaders of AGI companies such as Sam Altman and Dario Amodei.

The ideology that binds these actors together is the belief that AGI promises absolute power over the future to whoever builds it, and the desire to wield that power so they can usher in their favorite flavor of utopia.

AGI companies

Companies usually come together in order to make a profit: building a new technology is often a means to make money, not the goal itself. But in the case of AGI, the opposite is true. AGI companies were created explicitly to build AGI, and use products and money as a way to further this goal. This is clear both from their histories and from their explicit statements about why AGI matters.

All AGI companies have their roots in Singularitarianism, a turn-of-the-century online movement which focused on building AGI and reaping the benefits.

Before singularitarians, AGI and its potential power and implications were mostly discussed in papers by scientific luminaries:

Alan Turing warned in 1951 that

"once the machine thinking method had started, it would not take long to outstrip our feeble powers… at some stage therefore we should have to expect the machines to take control."

Later in the 1950s, John von Neumann discussed how

"the ever accelerating progress of technology… give the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue."

In the 1960s, mathematician I. J. Good introduced the idea of an AI "intelligence explosion," in which advanced AI improves itself to superhuman levels, similar to what is described in Section 3 on AI Catastrophe.

"Singularitarians are the partisans of the Singularity.

A Singularitarian is someone who believes that technologically creating a greater-than-human intelligence is desirable, and who works to that end.

A Singularitarian is advocate, agent, defender, and friend of the future known as the Singularity."

In one way or another, each of the utopists emerged from the Singularitarians.

"In recent years, Legg had joined an annual gathering of futurists called the Singularity Summit. “The Singularity” is the (theoretical) moment when technology improves to the point where it can no longer be controlled by the human race. [...] One of the founders was a self-educated philosopher and self-described artificial intelligence researcher named Eliezer Yudkowsky, who had introduced Legg to the idea of superintelligence in the early 2000s when they were working with a New York–based start-up called Intelligensis. But Hassabis and Legg had their eyes on one of the other [Singularity Summit] conference founders: Peter Thiel.

In the summer of 2010, Hassabis and Legg arranged to address the Singularity Summit, knowing that each speaker would be invited to a private party at Thiel’s town house in San Francisco."

Demis Hassabis soon convinced Elon Musk of the potential and dangers of AGI and secured additional funding from him by highlighting how Musk’s dream of colonizing Mars could be jeopardized by AGI. The New York Times reports that:

"Mr. Musk explained that his plan was to colonize Mars to escape overpopulation and other dangers on Earth. Dr. Hassabis replied that the plan would work — so long as superintelligent machines didn’t follow and destroy humanity on Mars, too.

Mr. Musk was speechless. He hadn’t thought about that particular danger. Mr. Musk soon invested in DeepMind alongside Mr. Thiel so he could be closer to the creation of this technology."

In 2014, Google acquired DeepMind, cashing out Elon Musk’s stake in the company. Musk’s disagreements with Larry Page over building AGI for “religious” reasons (discussed further in the zealots subsection) pushed Musk to join forces with Sam Altman and launch OpenAI, as discussed in released emails.

In 2021, history repeated itself: Anthropic was founded to compete with OpenAI in response to the latter’s deal with Microsoft. The founders of Anthropic included brother and sister Dario and Daniela Amodei. Dario was one of the researchers invited to the dinner that led to the founding of OpenAI, as noted by co-founder Greg Brockman in a since-deleted blog post.

And in two blog posts entitled “Machine Intelligence”, Sam Altman thanks Dario Amodei specifically for helping him come to grips with questions related to AGI:

"Thanks to Dario Amodei (especially Dario), Paul Buchheit, Matt Bush, Patrick Collison, Holden Karnofsky, Luke Muehlhauser, and Geoff Ralston for reading drafts of this and the previous post."

Elon Musk re-entered the race in 2023, founding xAI, with the stated goal of “trying to understand the universe.”

This ideology of building AGI to usher in the Singularity and utopia was thus foundational for all these companies. In addition, the leaders of these companies have stated that they believe AGI, and who builds it, to be of extreme importance to the future:

DeepMind co-founder Shane Legg writes in his PhD thesis,

"If our intelligence were to be significantly surpassed, it is difficult to imagine what the consequences of this might be. It would certainly be a source of enormous power, and with enormous power comes enormous responsibility."

DeepMind co-founder Demis Hassabis is quoted by The Guardian as saying that

"he is on a mission to “solve intelligence, and then use that to solve everything else.”"

OpenAI’s co-founder and CEO Sam Altman wrote in a public OpenAI plan that

"Successfully transitioning to a world with superintelligence is perhaps the most important—and hopeful, and scary—project in human history."

OpenAI co-founder Greg Brockman agrees, writing in a deleted blog post that

"there was one problem that I could imagine happily working on for the rest of my life: moving humanity to safe human-level AI. It’s hard to imagine anything more amazing and positively impactful than successfully creating AI, so long as it’s done in a good way."

Anthropic co-founder and CEO Dario Amodei stated in a recent blog post that

"[building AGI] is a world worth fighting for. If all of this really does happen over 5 to 10 years—the defeat of most diseases, the growth in biological and cognitive freedom, the lifting of billions of people out of poverty to share in the new technologies, a renaissance of liberal democracy and human rights—I suspect everyone watching it will be surprised by the effect it has on them."

This commitment is reiterated in Anthropic’s Core Views on AI Safety, which posits that

"most or all knowledge work may be automatable in the not-too-distant future – this will have profound implications for society, and will also likely change the rate of progress of other technologies as well (an early example of this is how systems like AlphaFold are already speeding up biology today). …it is hard to overstate what a pivotal moment this could be."

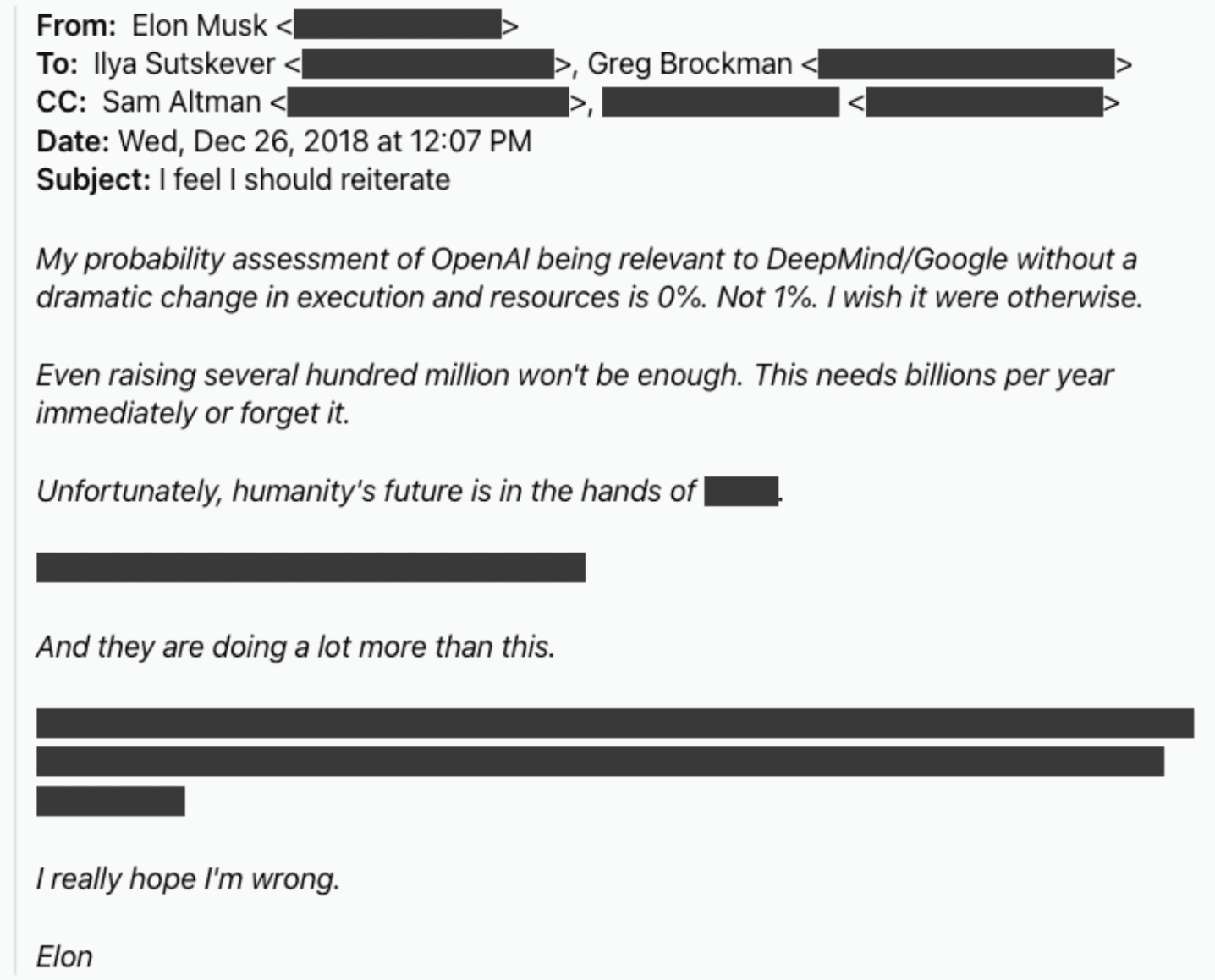

An email to OpenAI founders from Musk most ominously and succinctly summarizes the utopist position: “humanity’s future” is in the hands of whoever wins the race to AGI:

Entente

Aside from racing to build AGI, utopists have also started to tie their own goals (ushering in what they see as a good future) to the stated ideals of existing cultures and governments, notably democracy and the US government.

This is true for AGI companies, for example with OpenAI CEO Sam Altman writing in a recent op-ed that:

"There is no third option — and it’s time to decide which path to take. The United States currently has a lead in AI development, but continued leadership is far from guaranteed. Authoritarian governments the world over are willing to spend enormous amounts of money to catch up and ultimately overtake us. Russian dictator Vladimir Putin has darkly warned that the country that wins the AI race will “become the ruler of the world,” and the People’s Republic of China has said that it aims to become the global leader in AI by 2030."

Ex-OpenAI superalignment researcher Leopold Aschenbrenner echoes this sentiment in Situational Awareness:

"Every month of lead will matter for safety too. We face the greatest risks if we are locked in a tight race, democratic allies and authoritarian competitors each racing through the already precarious intelligence explosion at breakneck pace—forced to throw any caution by the wayside, fearing the other getting superintelligence first. Only if we preserve a healthy lead of democratic allies will we have the margin of error for navigating the extraordinarily volatile and dangerous period around the emergence of superintelligence. And only American leadership is a realistic path to developing a nonproliferation regime to avert the risks of self-destruction superintelligence will unfold."

Anthropic CEO Dario Amodei provides the clearest description of this approach, under the name “entente strategy”:

"My current guess at the best way to do this is via an “entente strategy”, in which a coalition of democracies seeks to gain a clear advantage (even just a temporary one) on powerful AI by securing its supply chain, scaling quickly, and blocking or delaying adversaries’ access to key resources like chips and semiconductor equipment. This coalition would on one hand use AI to achieve robust military superiority (the stick) while at the same time offering to distribute the benefits of powerful AI (the carrot) to a wider and wider group of countries in exchange for supporting the coalition’s strategy to promote democracy (this would be a bit analogous to “Atoms for Peace”). The coalition would aim to gain the support of more and more of the world, isolating our worst adversaries and eventually putting them in a position where they are better off taking the same bargain as the rest of the world: give up competing with democracies in order to receive all the benefits and not fight a superior foe.

If we can do all this, we will have a world in which democracies lead on the world stage and have the economic and military strength to avoid being undermined, conquered, or sabotaged by autocracies, and may be able to parlay their AI superiority into a durable advantage. This could optimistically lead to an “eternal 1991”—a world where democracies have the upper hand and Fukuyama’s dreams are realized."

That is, the democracies leading the AGI race (mostly the US) need to rush ahead in order to control the future, leading to an “eternal 1991.”

The entente strategy, which focuses more on communication and policy, has also involved utopists beyond the AGI companies. Amodei explicitly credits the think tank RAND with the name “entente strategy” and the rough idea:

"This is the title of a forthcoming paper from RAND, that lays out roughly the strategy I describe."

RAND, an influential think tank created at the end of WWII, has been heavily involved with the Biden administration to draft executive orders on AI. Yet it clearly predates the singularitarians of the early 2000s – AI and AGI were not part of its founding goals; instead, RAND took up these topics after Jason Matheny was appointed CEO in 2022.

This is not the first time Open Philanthropy directly supported utopist actors: In 2017, Open Philanthropy also recommended a grant of $30 million to OpenAI, arguing that

"it’s fairly likely that OpenAI will be an extraordinarily important organization, with far more influence over how things play out than organizations that focus exclusively on risk reduction and do not advance the state of the art."

In the “relationship disclosure” section, Open Philanthropy nods to the conflict of interest:

"OpenAI researchers Dario Amodei and Paul Christiano are both technical advisors to Open Philanthropy and live in the same house as Holden. In addition, Holden is engaged to Dario’s sister Daniela."

In conclusion, for the last 10 years, what has motivated both the leading AGI companies and the most powerful non-profits and NGOs working on AI risks has been the ideology that AGI will usher in utopia and offer massive control of the future to whoever wields it.

Now, the ideology of "AI will grant unlimited power" has taken a nationalistic turn, and started to find its footing in the entente strategy. While entente may look like a new ideology, it's really the same utopists coming up with a slightly different story to justify the race to AGI.

Big Tech: Keeping a hand on the technological frontier

Big Tech companies are used to holding monopolies and controlling the technological frontier, having dominated the internet, mobile, and cloud computing markets. They are doing the same with AI, partnering with and investing in the utopists’ smaller companies and making them dependent on their extensive resources.

Each of the utopists is backed by at least one of the Big Tech actors:

DeepMind was acquired by Google;

OpenAI is enabled by a cumulative $13 billion of investment from Microsoft and uses their infrastructure;

Anthropic received $4 billion of investment from Amazon, as well as $2 billion from Google;

xAI is enabled by the investments of Elon Musk, who controls the X empire of Tesla, SpaceX, etc.

Big Tech companies play a unique role in the AGI race, bankrolling the utopists and exploiting their progress. They were not founded in order to build AGI, and did not start the AGI race – yet the traction towards AGI eventually got their attention.

For example, Microsoft leadership invested in and partnered with OpenAI because it sensed AI progress passing it by and was unsatisfied with its internal AI teams. Kevin Scott, Microsoft’s CTO, wrote to CEO Satya Nadella and founder Bill Gates in 2019:

"We have very smart ML people in Bing, in the vision team, and in the speech team. But the core deep learning teams within each of these bigger teams are very small, and their ambitions have also been constrained, which means that even as we start to feed them resources, they still have to go through a learning process to scale up. And we are multiple years behind the competition in terms of ML scale."

And Apple, which is historically prone to building in-house rather than buying, recently announced a deal with OpenAI to use ChatGPT in order to power Siri.

Yet as usual in this kind of bargain, Big Tech is starting to reestablish its power and monopoly after depending for a while on the utopists. Not only are these companies the only ones with the resources needed to enable further scaling, but they have also gained access to the utopists’ technology and sometimes their teams:

Google literally acquired DeepMind, and has merged it with their previous internal Google Brain, with final control over their research and results.

Microsoft has intellectual property rights to OpenAI code, and the new CEO of Microsoft AI, ex-DeepMind co-founder Mustafa Suleyman, has been reportedly studying the algorithms.

Amazon, despite its partnership with Anthropic, has been assembling a massive internal AI team.

Meta, which never sponsored any utopists, has been consistently building and releasing the most powerful open-weight LLMs, the Llama family of models, for years now.

The utopists are enabled by the compute, scale, funding, and lobbying capacity of their Big Tech backers, who end up dodging public attention in the race to AGI while being one of the main driving forces.

Accelerationists: Idolizing technological progress

Accelerationists believe that technology is an unmitigated good and that we must pursue the change it will bring about as aggressively as possible, eliminating any impediments or regulations. Accelerationists include many VCs, AGI company leaders, and software engineers; key players include Meta and venture capital fund Andreessen Horowitz (a16z).

A representative ode to accelerationism is The Techno-Optimist Manifesto by a16z co-founder Marc Andreessen. It opens by drumming up fervor for the idea that technology is what will save society, and that critics of technology are what will damn it:

"We are being lied to. / We are told that technology takes our jobs, reduces our wages, increases inequality, threatens our health, ruins the environment, degrades our society, corrupts our children, impairs our humanity, threatens our future, and is ever on the verge of ruining everything. / We are told to be angry, bitter, and resentful about technology. / We are told to be pessimistic. / The myth of Prometheus – in various updated forms like Frankenstein, Oppenheimer, and Terminator – haunts our nightmares. / We are told to denounce our birthright – our intelligence, our control over nature, our ability to build a better world. / We are told to be miserable about the future…

Our civilization was built on technology. / Our civilization is built on technology. / Technology is the glory of human ambition and achievement, the spearhead of progress, and the realization of our potential. / For hundreds of years, we properly glorified this – until recently.

I am here to bring the good news.

We can advance to a far superior way of living, and of being. / We have the tools, the systems, the ideas. / We have the will. / It is time, once again, to raise the technology flag. / It is time to be Techno-Optimists."

While Andreessen is particularly dramatic with his language, accelerationism generally takes the form of pushing for libertarian futures in which risks from technology are managed purely by market forces rather than government regulation. As such, it’s particularly adamant about pushing for open-weight AIs, bringing dangerous technology to every possible criminal, terrorist and dictator in order to “ensure things go well”.

A common argument by accelerationists is that open source makes software safer by having more eyes explore and inspect the source code. See Andreessen’s recent post co-authored with Microsoft leadership:

"[Open-Source AIs] also offer the promise of safety and security benefits, since they can be more widely scrutinized for vulnerabilities."

Yet this is profoundly misleading. First, as we articulated before, the AIs released by the likes of Meta and Mistral AI are not open-source: they don’t include everything necessary to train the model, such as the data and the training algorithm. This makes them open-weight, and thus much more opaque to the external user than they are to the internal developer. And even worse than that, recall that modern AIs are grown, not built; this means that even the very researchers who have created these AIs don’t know how they work, and how to fix them if there is a problem. So the main value of open-source software, of having many more eyeballs on the source code to find bugs and security exploits, vanishes because no one who looks at the numbers in a modern AI understands what they mean.

Another argument is simply the proposition that giving everyone a dangerous technology somehow makes it easier for governments and law-enforcement to control it. Indeed, Meta’s CEO Mark Zuckerberg makes this very claim in his defense of his company’s open-weight approach:

"I think it will be better to live in a world where AI is widely deployed so that larger actors can check the power of smaller bad actors."

As we discussed above, this argument fails to address the asymmetric nature of the danger: it only takes one bad actor succeeding to create chaos, which forces governments and law enforcement to catch every single threat without making a single mistake, all because accelerationists decided to give open-weight AIs to criminals, terrorists, and rogue nations.

In the end, the goal of accelerationists is simply to avoid regulations on technology at all costs, since they see technology as such an unmitigated good. These beliefs leave no room for calm and collected reflection on the potential risks of technology and how to handle them properly.

Zealots: Worshiping superintelligence

The zealots believe AGI to be a superior successor to humanity that is akin to a god; they don’t want to build or control it themselves, but they do want it to arrive, even if humanity is dominated, destroyed, or replaced by it.

Larry Page, co-founder of Google and key advocate of the DeepMind acquisition, is one such zealot. Elon Musk’s biographer detailed Page and Musk’s conflict at a dinner that broke up their friendship (emphasis ours):

"Musk argued that unless we built in safeguards, artificial intelligence systems might replace humans, making our species irrelevant or even extinct.

Page pushed back. Why would it matter, he asked, if machines someday surpassed humans in intelligence, even consciousness? It would simply be the next stage of evolution.

Human consciousness, Musk retorted, was a precious flicker of light in the universe, and we should not let it be extinguished. Page considered that sentimental nonsense. If consciousness could be replicated in a machine, why would that not be just as valuable? Perhaps we might even be able someday to upload our own consciousness into a machine. He accused Musk of being a “specist,” someone who was biased in favor of their own species. “Well, yes, I am pro-human,” Musk responded. “I fucking like humanity, dude.”"

Another is Richard Sutton, one of the fathers of modern Reinforcement Learning, who articulated his own zealotry in his AI Succession presentation:

"We should not resist succession, but embrace and prepare for it

Why would we want greater beings kept subservient?

Why don't we rejoice in their greatness as a symbol and extension of humanity’s greatness, and work together toward a greater and inclusive civilization?"

Another father of AI, Jürgen Schmidhuber, believes that AI will inevitably become more intelligent than humanity:

"In the year 2050 time won’t stop, but we will have AIs who are more intelligent than we are and will see little point in getting stuck to our bit of the biosphere. They will want to move history to the next level and march out to where the resources are. In a couple of million years, they will have colonised the Milky Way."

Despite this, he disregards the risks, assuming that this all will happen while humanity plods along. The Guardian reports: “Schmidhuber believes AI will advance to the point where it surpasses human intelligence and has no interest in humans – while humans will continue to benefit and use the tools developed by AI.”

Whether we are replaced by a successor species or simply hand over the future to it, zealots believe the coming AI takeover is inevitable and humanity should step out of the way. While the zealots represent a minority in the AGI race, they’ve had massive influence: Page and Musk’s conflict led to Google’s acquisition of DeepMind and thus the creation of OpenAI, and arguably catalyzed the creation of Anthropic and xAI.

Opportunists: Following the hype

This last group comprises everyone who joined the AGI race and AI industry not because of a particular ideology, but in order to ride the wave of hype and investment and get money, status, and power from it.

This heterogeneous group includes smaller actors in the AI space, such as Mistral AI and Cohere; hardware manufacturers, such as Nvidia and AMD; startups riding the wave of AI progress, such as Perplexity and Cursor; established tech companies integrating AIs into their products, such as Zoom and Zapier; and older companies trying to stay relevant to the AI world, such as Cisco and IBM.

Most of the core progress towards AGI has been driven by utopists and Big Tech, not opportunists following the hype. Nonetheless, the opportunists’ crowding into the market has fueled the recent AI boom and built the infrastructure on which it takes place.

Motivated by their convictions, the utopists are setting the rapid pace of the AGI race despite publicly acknowledging the risks.

Because they believe that AGI is possible and that building it leads to control of the future, utopists conclude that they must get there first, protecting humanity from the “wrong people” beating them to the punch. Utopists worry about AGI being built by someone naive or incompetent, inadvertently triggering catastrophe; per Ashlee Vance’s biography of Musk, this was Musk’s underlying concern in his disagreement with Larry Page:

"He opened up about the major fear keeping him up at night: namely that Google’s co-founder and CEO Larry Page might well have been building a fleet of artificial-intelligence-enhanced robots capable of destroying mankind. “I’m really worried about this,” Musk said. It didn’t make Musk feel any better that he and Page were very close friends and that he felt Page was fundamentally a well-intentioned person and not Dr. Evil. In fact, that was sort of the problem. Page’s nice-guy nature left him assuming that the machines would forever do our bidding. “I’m not as optimistic,” Musk said. “He could produce something evil by accident.”"

Utopists also consider anyone with opposing values to be the wrong people. This includes foreign adversaries such as China and Russia. Sam Altman writes in a recent op-ed that:

"There is no third option — and it’s time to decide which path to take. The United States currently has a lead in AI development, but continued leadership is far from guaranteed. Authoritarian governments the world over are willing to spend enormous amounts of money to catch up and ultimately overtake us. Russian dictator Vladimir Putin has darkly warned that the country that wins the AI race will “become the ruler of the world,” and the People’s Republic of China has said that it aims to become the global leader in AI by 2030."

This is also the main thrust of the “entente strategy”: democracies must race to AGI lest they be overtaken by dictatorships.

This dynamic creates an arms race, where staying in the lead matters more than everything else. This has been a recurring motif throughout the AGI race: OpenAI was built because Elon Musk wanted to overtake DeepMind after Google’s acquisition; Anthropic wanted to outdo OpenAI after the latter made a deal with Microsoft; and xAI is yet another attempt by Elon Musk to get back in the race.

This is why those at the forefront of the AGI race (mainly OpenAI and Anthropic) end up being the people most willing to throw away safety in order to make a move. As a telling example, Anthropic released Claude, which they proudly (and correctly) described as pushing the state-of-the-art, contradicting their own Core Views on AI Safety, which promised “We generally don’t publish this kind of work because we do not wish to advance the rate of AI capabilities progress.”

Over time, anyone willing to slow down for safety is weeded out, like Jan Leike and Ilya Sutskever, who led OpenAI’s Superalignment Team. Jan Leike explained his resignation by pointing to the lack of support and resources for his work on safety:

"Over the past few months my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.

[...]

But over the past years, safety culture and processes have taken a backseat to shiny products."

The tendency to rationalize away the risks is best captured by Situational Awareness, a report written by an ex-OpenAI employee, Leopold Aschenbrenner. He articulates his concern about future risks and the inadequate mitigation measures:

"We’re not on track for a sane chain of command to make any of these insanely high-stakes decisions, to insist on the very-high-confidence appropriate for superintelligence, to make the hard decisions to take extra time before launching the next training run to get safety right or dedicate a large majority of compute to alignment research, to recognize danger ahead and avert it rather than crashing right into it. Right now, no lab has demonstrated much of a willingness to make any costly tradeoffs to get safety right (we get lots of safety committees, yes, but those are pretty meaningless). By default, we’ll probably stumble into the intelligence explosion and have gone through a few OOMs before people even realize what we’ve gotten into.

We’re counting way too much on luck here."

On the very next page, he then contends that (emphasis ours):

"Every month of lead will matter for safety too. We face the greatest risks if we are locked in a tight race, democratic allies and authoritarian competitors each racing through the already precarious intelligence explosion at breakneck pace—forced to throw any caution by the wayside, fearing the other getting superintelligence first. Only if we preserve a healthy lead of democratic allies will we have the margin of error for navigating the extraordinarily volatile and dangerous period around the emergence of superintelligence. And only American leadership is a realistic path to developing a nonproliferation regime to avert the risks of self-destruction superintelligence will unfold."

This is the gist of the AGI race: fear makes the very people worried about risks from AGI race even faster and deprioritize safety, arguing that they will eventually stop and do things correctly when they finally feel safe. Ironically, this very attitude creates more competitors, more people racing for AGI, and ensures it truly is a race to the bottom.

Utopists are the core drivers of the race to AGI, but the ideology of every other group adds fuel to the fire. Big Tech is investing billions in order to capture this powerful new technology on the horizon; accelerationists spread AIs and AI research everywhere and undermine any regulation, bringing about the worst nightmare of the utopists and making them race even faster; zealots praise the coming of godly AIs; and opportunists enmesh themselves with the whole race to AGI, increasing the attention, funding, infrastructure and support it gets.

The above analysis clarifies what is happening: since the utopists actually want to build AGI, and since they are the ones most willing to throw safety under the bus, they are simply using the industry playbook of Big Tech, Big Oil, Big Tobacco, etc., to reach their goal, while pretending to champion safety.

The Industry Playbook

At its core, the industry playbook is about getting obstacles out of one’s way, be they competitors, the public, or the government.

The main strategy is FUD, for Fear, Uncertainty and Doubt: it helps anyone who benefits from the status quo (usually “inaction”), when they need to fend off something menacing this status quo.

For example, an industry sells a product (tobacco, asbestos, social media, etc.) that harms people, and some are starting to notice and make noise about it. Denying the harms may be impossible or could draw more attention to them, so industrial actors opt to spread confusion instead: they accuse others of lying or being in the pockets of shadowy adversaries, drown the public in tons of junk data that is hard to evaluate, bore people with minutiae to redirect public attention, or argue that the science is nascent, controversial, or premature. In short, they waste as much time and energy of their opponents and the general public as possible.

This delays countermeasures and buys the incumbent time. And, if they’re lucky, it might be enough to outlast the underfunded pro-civil adversaries as they run out of funding or public attention.

The canonical example of FUD comes from tobacco: as documented in a history of tobacco industry tactics, John W. Hill, the president of the leading public relations firm at the time, published his recommendations in 1953 as experts began to understand the dangers of smoking (emphasis ours):

"So he proposed seizing and controlling science rather than avoiding it. If science posed the principal—even terminal—threat to the industry, Hill advised that the companies should now associate themselves as great supporters of science. The companies, in his view, should embrace a sophisticated scientific discourse; they should demand more science, not less.

Of critical importance, Hill argued, they should declare the positive value of scientific skepticism of science itself. Knowledge, Hill understood, was hard won and uncertain, and there would always be skeptics. What better strategy than to identify, solicit, support, and amplify the views of skeptics of the causal relationship between smoking and disease? Moreover, the liberal disbursement of tobacco industry research funding to academic scientists could draw new skeptics into the fold. The goal, according to Hill, would be to build and broadcast a major scientific controversy. The public must get the message that the issue of the health effects of smoking remains an open question. Doubt, uncertainty, and the truism that there is more to know would become the industry's collective new mantra."

FUD is so effective that the OSS, the wartime predecessor of the CIA, recommended it to operatives in WWII: its Simple Sabotage Field Manual abounds with tactics that are meant to slow down, exhaust, and confuse, while maintaining plausible deniability.

FUD also encourages and exploits the industry funding of scientific research. A recent review of the influence of industry funding on research describes the practice (emphasis ours):

"Qualitative and quantitative studies included in our review suggest that industry also used research funding as a strategy to reshape fields of research through the prioritization of topics that supported its policy and legal positions, while distracting from research that could be unfavorable. Analysis of internal industry documents provides insight into how and why industry influenced research agendas. It is particularly interesting to note how corporations adopted similar techniques across different industry sectors (i.e., tobacco, alcohol, sugar, and mining) and fields of research. The strategies included establishing research agendas within the industry that were favorable to its positions, strategically funding research along these lines in a way that appeared scientifically credible, and disseminating these research agendas by creating collaborations with prominent institutions and researchers."

That being said, FUD only works because the default path is to preserve the risky product; it would be ineffective if the default strategy were instead to wait for scientific consensus to declare the product safe.

To avoid this, the industry playbook encourages self-regulation. If the industry regulates itself, then governments cannot act until something bad happens, and when it does, the industry deploys FUD to keep outrunning regulation indefinitely.

Facebook has followed this playbook, repeatedly pushing against government regulation, as demonstrated in their interactions with the European Commission in 2017 (emphasis ours):

"In January 2017, Facebook referred only to its terms of service when explaining decisions on whether or not to remove content, the documents show. “Facebook explained that referring to the terms of services allows faster action but are open to consider changes,” a Commission summary report from then reads.

“Facebook considers there are two sets of laws: private law (Facebook community standards) and public law (defined by governments),” the company told the Commission, according to Commission minutes of an April 2017 meeting.

“Facebook discouraged regulation,” reads a Commission memo summarizing a September 2017 meeting with the company.

The decision to press forward with the argument is unusual, said Margarida Silva, a researcher and campaigner at Corporate Europe Observatory. “You don’t see that many companies so openly asking for self-regulation, even going to the extent of defending private law.”

Facebook says it has taken the Commission’s concerns into account. “When people sign up to our terms of service, they commit to not sharing anything that breaks these policies, but also any content that is unlawful,” the company told POLITICO. “When governments or law enforcement believe that something on Facebook violates their laws, even if it doesn’t violate our standards, they may contact us to restrict access to that content.”"

Unsurprisingly, self-regulation did not work, as a recent FTC report shows:

"A new Federal Trade Commission staff report that examines the data collection and use practices of major social media and video streaming services shows they engaged in vast surveillance of consumers in order to monetize their personal information while failing to adequately protect users online, especially children and teens."

Last but not least, FUD itself, particularly fear, can help with defending self-regulation. This is the standard technique used by industries to get governments off their backs: scream that regulating them will destroy the country’s competitiveness and allow other countries (maybe even the enemy of the time, then the USSR, now China) to catch up.

This is visible, for example, in the unified front presented by Big Tech against Lina Khan, the new chair of the FTC, who vowed to curb monopolies through regulation and antitrust law.

What’s most tricky about the industry playbook in general, and FUD in particular, is that individual actions can always be made sensible and reasonable on the surface — they push for and worry about science, innovation, consumers, competitiveness, and all the other sacred words of modernity. They sound reasonable, and almost civic-minded. And this makes criticizing them even harder, because pointing at any single action fails to unearth the strategy.

If we come back to what the utopists and their allies have been doing, we see the same story: each action can independently be justified one way or the other. Yet taking them together reveals a general pattern of systematically undermining safety and regulation, notably through:

Spreading confusion through misinformation and double-speak

Exploiting fear of AI risks to accelerate even further

Capturing and neutralizing both regulation and AI safety efforts

Spreading confusion through misinformation and double-speak

It should be cause for concern that the utopists are willing to spread misinformation, go back on their commitments, and outright lie to stay on the frontline of the race.

An egregious recent example is how, at a Senate hearing, OpenAI CEO Sam Altman deliberately contradicted his past position to avoid a difficult conversation about AI x-risks with Senator Blumenthal, who quoted his Machine Intelligence blog post:

"You have said ‘development of superhuman machine intelligence is probably the greatest threat to the continued existence of humanity’; you may have had in mind the effect on jobs, which is really my biggest nightmare in the long term."

Altman then responds:

"Like with all technological revolutions, I expect there to be significant impact on jobs, but exactly what that impact looks like is very difficult to predict."

This misdirects attention from what Altman originally wrote. The original text of Altman’s blog post reads:

"The development of superhuman machine intelligence is probably the greatest threat to the continued existence of humanity. There are other threats that I think are more certain to happen (for example, an engineered virus with a long incubation period and a high mortality rate) but are unlikely to destroy every human in the universe in the way that SMI [Superhuman Machine Intelligence] could."

Altman’s response distorts the meaning of his original post, and instead runs with the misunderstanding of Blumenthal.

Similarly, in his more recent writing Altman has pulled back from his previous position on AGI risks and continues to downplay the risks by only addressing AI’s impact on the labor market:

"As we have seen with other technologies, there will also be downsides, and we need to start working now to maximize AI’s benefits while minimizing its harms. As one example, we expect that this technology can cause a significant change in labor markets (good and bad) in the coming years, but most jobs will change more slowly than most people think, and I have no fear that we’ll run out of things to do (even if they don’t look like “real jobs” to us today)."

Altman simply changed his tune whenever it helped him, shifting from extinction risk to labor, claiming this was what he meant all along.

Anthropic has also explicitly raced and pushed the state-of-the-art after reassuring everyone that they would prioritize safety. Historically, Anthropic asserted that their focus on safety means that they wouldn’t advance the frontier of capabilities:

"We generally don’t publish this kind of work because we do not wish to advance the rate of AI capabilities progress. In addition, we aim to be thoughtful about demonstrations of frontier capabilities (even without publication)."

Yet they released the Claude 3 family of models, noting themselves that:

"Opus, our most intelligent model, outperforms its peers on most of the common evaluation benchmarks for AI systems, including undergraduate level expert knowledge (MMLU), graduate level expert reasoning (GPQA), basic mathematics (GSM8K), and more. It exhibits near-human levels of comprehension and fluency on complex tasks, leading the frontier of general intelligence."

Anthropic’s leaked pitch deck in 2023 is another example, with the AGI company stating that it plans to build models “orders of magnitude” larger than competitors. They write: “These models could begin to automate large portions of the economy,” and “we believe that companies that train the best 2025/26 models will be too far ahead for anyone to catch up in subsequent cycles.” These statements show that despite arguments about restraint and safety, Anthropic is just as motivated to race for AGI as their peers.

DeepMind CEO Demis Hassabis has been similarly inconsistent, arguing that AGI risks are legitimate and demanding regulation and a slowdown, while simultaneously leading DeepMind’s effort to catch up to ChatGPT and Claude. Elon Musk has also consistently argued that AI poses serious risks, despite being the driving force behind both OpenAI and xAI.

These contradictory actions lead to huge public confusion, with some onlookers even arguing that concerns about x-risk are a commercial hype strategy. But the reality is the opposite: the utopists understand that AGI will be such an incredibly powerful technology that they are willing to cut corners to reach it first. Today, even if these actors can signal concern, history gives us no reason to believe they would ever prioritize safety over racing.

Turning care into acceleration

Recall that uncertainty and fear are essential components of FUD, and the whole industry playbook: by emphasizing uncertainty on one hand, and fear on the other, the status quo can be maintained.

In this case, utopists have managed to turn both the uncertainty about the details of AGI risks, and the fear of it being done by the “wrong” people, into two more excuses to maintain the status quo of racing as fast as possible.

First, they have been leveraging the uncertainty around the risks from AI as an argument to race to AGI, just so it’s possible to iterate and be empirical about them:

OpenAI’s Planning for AGI argues that because it’s hard to anticipate AI capabilities, it’s best to move fast and keep iterating and releasing models:

“We currently believe the best way to successfully navigate AI deployment challenges is with a tight feedback loop of rapid learning and careful iteration. Society will face major questions about what AI systems are allowed to do, how to combat bias, how to deal with job displacement, and more. The optimal decisions will depend on the path the technology takes, and like any new field, most expert predictions have been wrong so far. This makes planning in a vacuum very difficult.”

Similarly, Anthropic’s Core Views on Safety argues that safety is a reason to race to (or past) the capabilities frontier:

“Unfortunately, if empirical safety research requires large models, that forces us to confront a difficult trade-off. We must make every effort to avoid a scenario in which safety-motivated research accelerates the deployment of dangerous technologies. But we also cannot let excessive caution make it so that the most safety-conscious research efforts only ever engage with systems that are far behind the frontier, thereby dramatically slowing down what we see as vital research. Furthermore, we think that in practice, doing safety research isn’t enough – it’s also important to build an organization with the institutional knowledge to integrate the latest safety research into real systems as quickly as possible.”

Elon Musk has used this argument too, justifying his creation of xAI with the claim that the only way to make AGI “good” was to be a participant:

“I’ve really struggled with this AGI thing for a long time and I’ve been somewhat resistant to making it happen,” he said. “But it really seems that at this point it looks like AGI is going to happen so there’s two choices, either be a spectator or a participant. As a spectator, one can’t do much to influence the outcome.”

Second, fear of the wrong people developing AGI has been exploited as a further reason to race even faster:

This is the gist of the “entente strategy” proposed by Anthropic CEO Dario Amodei and endorsed by many influential members of Effective Altruism, such as think tank RAND, discussed above, as well as the language Sam Altman is increasingly using to stoke nation-state competition:

"That is the urgent question of our time. The rapid progress being made on artificial intelligence means that we face a strategic choice about what kind of world we are going to live in: Will it be one in which the United States and allied nations advance a global AI that spreads the technology’s benefits and opens access to it, or an authoritarian one, in which nations or movements that don’t share our values use AI to cement and expand their power?"

And in one of the most egregious cases, Leopold Aschenbrenner’s Situational Awareness, which captures much of the utopists’ view, argues that racing and abandoning safety is the only way to ensure that noble actors win, and can then take the time to proceed thoughtfully:

“Only if we preserve a healthy lead of democratic allies will we have the margin of error for navigating the extraordinarily volatile and dangerous period around the emergence of superintelligence. And only American leadership is a realistic path to developing a nonproliferation regime to avert the risks of self-destruction superintelligence will unfold.”

This has the same smell as Big Tobacco reinforcing the uncertainty of science as a way to sell products that kill people, and Big Tech stoking the fear of losing innovation as a way to ensure they can keep their monopolies and kill competition. These are not thoughtful arguments that examine the pros and cons and end up deciding to race; these are instead rationalizations of the desire and decision to race, playing on sacred notions like science and democracy to get what they want.

Capturing and neutralizing regulation and research

The AGI race has also seen systematic (and mostly successful) attempts to capture and neutralize the two forces that might slow down the race: AI regulation and AI safety research.

Capturing AI regulation

Utopists consistently undermine regulation attempts, emphasizing the risks of slowing or stopping AI development by appealing to geopolitical tensions or suggesting that the regulation is premature and will stifle innovation.

OpenAI, Google, and Anthropic all opposed core provisions of SB 1047, one of the few recent proposals that could have effectively regulated AI, emphasizing the alleged costs to competitiveness and that the industry is too nascent. OpenAI argued that this kind of legislation should happen at the federal level, not at the state level, and thus opposed the law because it might be unproductive:

"However, the broad and significant implications of AI for U.S. competitiveness and national security require that regulation of frontier models be shaped and implemented at the federal level. A federally-driven set of AI policies, rather than a patchwork of state laws, will foster innovation and position the U.S. to lead the development of global standards. As a result, we join other AI labs, developers, experts and members of California’s Congressional delegation in respectfully opposing SB 1047."

"What is needed in such a new environment is iteration and experimentation, not prescriptive enforcement. There is a substantial risk that the bill and state agencies will simply be wrong about what is actually effective in preventing catastrophic risk, leading to ineffective and/or burdensome compliance requirements."

These arguments seem credible and well-intentioned on their own — there are indeed geopolitical tensions, and we should design effective legislation.

However, considered in concert, this is another FUD tactic: instead of advocating for stronger, more effective and sensible legislation, or brokering international agreements to limit geopolitical tensions, the utopists suggest self-regulation that keeps them in control. Most have published frameworks for the evaluation of existential risks: Anthropic’s Responsible Scaling Policy, OpenAI’s Preparedness Framework, and DeepMind’s Frontier Safety Framework.

The details of each of these policies are irrelevant, because at no point do they provide for enforcement by governments. Even worse, the documents clearly state that the AGI companies can and will edit the conditions and constraints for future AIs, however they see fit. Indeed, Anthropic hasn’t missed the chance to introduce a backdoor for racing even faster if they are no longer in the lead:

"It is possible at some point in the future that another actor in the frontier AI ecosystem will pass, or be on track to imminently pass, a Capability Threshold without implementing measures equivalent to the Required Safeguards such that their actions pose a serious risk for the world. In such a scenario, because the incremental increase in risk attributable to us would be small, we might decide to lower the Required Safeguards. If we take this measure, however, we will also acknowledge the overall level of risk posed by AI systems (including ours), and will invest significantly in making a case to the U.S. government for taking regulatory action to mitigate such risk to acceptable levels."

The utopists are exploiting fear of China and bad legislation to retain control over regulation, in turn devising proposals that let them modify boundaries as they approach them. To understand what type of regulation these companies really believe in, we can just track their actions: polite talk in public, while lobbying hard against regulation behind the scenes, such as what OpenAI did for the EU AI Act.

Both Big Tech and accelerationist actors are supporting the utopists in their push against any kind of binding AI regulation. Indeed, Microsoft, which bankrolls OpenAI, and Andreessen Horowitz, which positions itself as a champion of little tech and open-source, recently co-authored a public post arguing:

"As the new global competition in AI evolves, laws and regulations that mitigate AI harm should focus on the risk of bad actors misusing AI and aim to avoid creating new barriers to business formation, growth, and innovation."

As discussed in Section 5, the impacts of these ideological actors on the policy space have been widespread, and now, unenforced reactive frameworks have become the primary governance strategy endorsed by governments and AI governance actors. For those familiar with the past two decades of Big Tech’s anti-regulation approaches, this is nothing new.

Capturing safety research

The utopists have also found two ways to capture safety research: constraining “safety” and “alignment” work to research that can’t impede the race, and controlling the funding landscape.

OpenAI managed to redefine safety and alignment from “doesn’t endanger humanity” to “doesn’t produce anything racist or illegal.” The 2018 OpenAI Charter referenced AGI in its discussion of safety:

"OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome."

The May 2024 OpenAI Safety Update strikes a different tone:

"Our models have become significantly safer over time. This can be attributed to building smarter models which typically make fewer factual errors and are less likely to output harmful content even under adversarial conditions like jailbreaks."

Anthropic has similarly maneuvered to define safety as is convenient, focusing its alignment and safety research efforts on areas that do not actually limit racing but instead provide an edge. These include:

Mechanistic interpretability, which tries to reverse-engineer AIs to understand how they work. That understanding can then be used to advance capabilities and race even faster.

Scalable oversight, which is another term for whack-a-mole approaches where the current issues are incrementally “fixed” by training them away. This incentivizes obscuring issues rather than resolving them. It also helps Anthropic build chatbots, providing a steady revenue stream.

Evaluations, which test LLMs for dangerous capabilities. But Anthropic ultimately decides what is cause for slowdown, and their commitments are voluntary.

These approaches lead to a strategy that aims to use superintelligent AI to solve the hardest problems of AI safety, which, naturally, is an argument that is then used to justify racing to build AGI. OpenAI, DeepMind, Anthropic, xAI (“accelerating human scientific discovery”), and others have all proposed deferring and outsourcing questions of AI safety to more advanced AI systems.

These opinions have percolated into the field of technical AI safety and now make up the majority of research efforts, largely because the entire funding landscape is controlled by utopists.

Outside of AGI companies, the main source of funding has been the Effective Altruism community, which has pushed for the troubling entente strategy and endorsed self-regulation through its main funding organ, Open Philanthropy.

In this situation, it is expected that none of the “AI safety” actors are really pushing against the race, nor paying the costs of alignment.

Because utopists are driven to be the first to build AGI to control the future, they intentionally downplay the risks and neutralize any potential regulatory obstacles. Their behavior, and the secondary motions of Big Tech players and accelerationists, further accelerate the race: everybody is trying to get ahead.

The race runs the risk of morphing from commercial to political as governments become increasingly convinced that AGI is a matter of national security and supremacy. Government intervention could override market forces and unlock significantly more funding, heightening geopolitical tensions. Political actors may be motivated to race out of fear that a competitor can deploy AGI that neutralizes all other parties.

This transition might already be in motion. The US government recently toyed with the idea of establishing national labs on AI:

"The new approach won’t propose the “Manhattan Project for AI” that some have urged. But it should offer a platform for public-private partnerships and testing that could be likened to a national laboratory, a bit like Lawrence Livermore in Berkeley, Calif., or Los Alamos in New Mexico. For the National Security Council officials drafting the memo, the core idea is to drive AI-linked innovation across the U.S. economy and government, while also anticipating and preventing threats to public safety."

And then, the US government started collaborating with AGI companies to harness the power of AI for national security:

"The National Security Memorandum (NSM) is designed to galvanize federal government adoption of AI to advance the national security mission, including by ensuring that such adoption reflects democratic values and protects human rights, civil rights, civil liberties and privacy. In addition, the NSM seeks to shape international norms around AI use to reflect those same democratic values, and directs actions to track and counter adversary development and use of AI for national security purposes."

This is yet another sign that the AI industry is gearing itself up to race even faster, not pivot toward safety.